2017-09-20

Sep 20 In-Class Exercise.

Hey Everyone,

Post your solutions to the Sep 20 In-Class Exercise to this thread.

Best,

Chris

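Below is a link to an abstract showing how to do it:

[Solving parity-N problems with feedforward neural networks](http://ieeexplore.ieee.org/abstract/document/1223966/)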

Click "Download PDF" to see equations 3, 4, and 5 along with Fig. 1(b).

For boolean formulas, you can always build a two-layer perceptron network with $n+1$ neurons, where $n$ is the number of satisfying assignments of the formula: $n$ input perceptrons, one per satisfying assignment, plus one output perceptron.

**Input Perceptrons:**

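For each satisfying assignment $x'$, set the weights and the threshold $\theta$ so that *only that single satisfying assignment* makes the neuron output $1$, and every other input makes it output $0$. One way to do this: weight $+1$ on each variable that is $1$ in $x'$, weight $-1$ on each variable that is $0$ in $x'$, and $\theta$ equal to the number of $1$s in $x'$.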

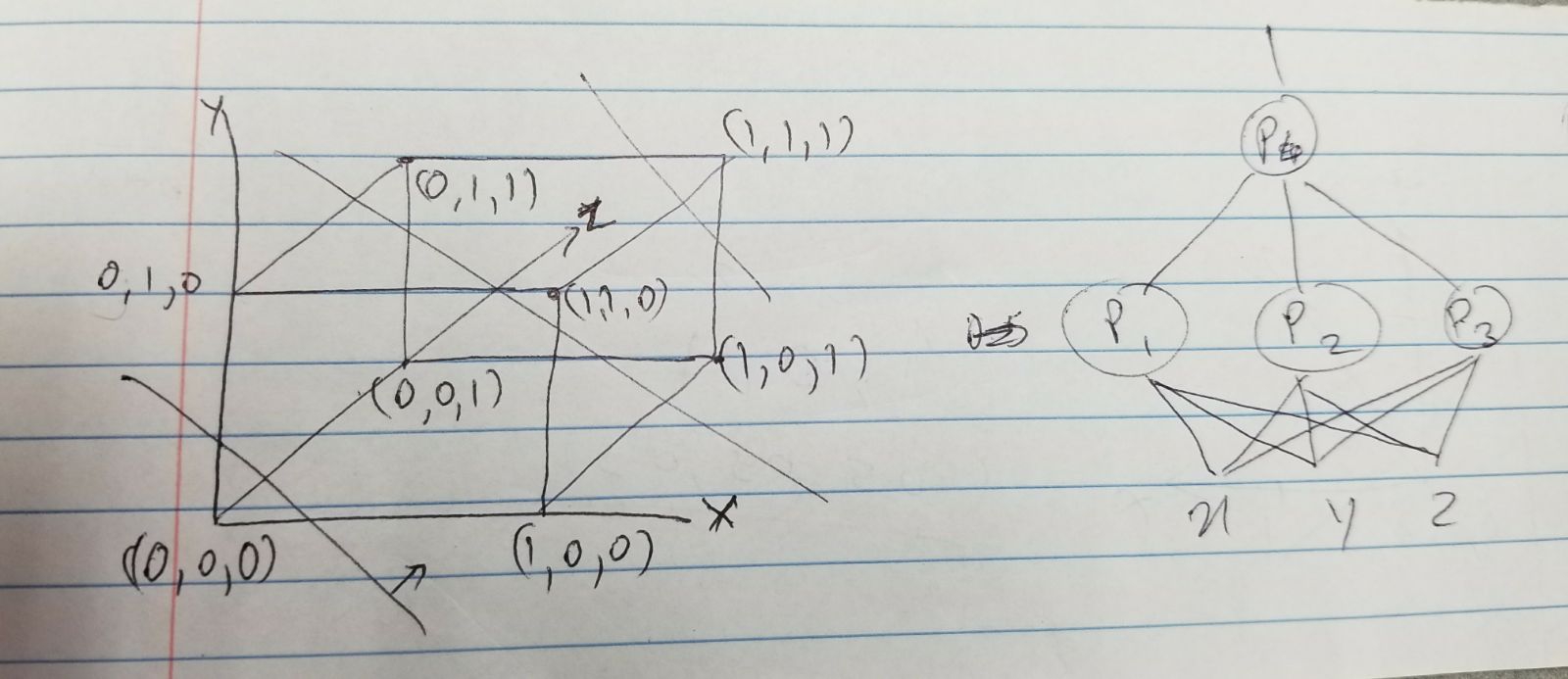

Example: $\vec{x} = \langle 0,1,1 \rangle$ is a satisfying assignment, and the perceptron for this assignment is:

$$g_i = \begin{cases} 1 & \text{if } -x_1 + x_2 + x_3 \geq 2 \\ 0 & \text{otherwise} \end{cases}$$
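A minimal sketch of the input-perceptron rule, assuming the weighting implied by the example: $+1$ on variables that are $1$ in the assignment, $-1$ on variables that are $0$, and $\theta$ equal to the number of $1$s. The helper names `make_input_perceptron` and `fires` are hypothetical, chosen for illustration.

```python
from itertools import product


def make_input_perceptron(assignment):
    """Return (weights, theta) for a unit that fires only on `assignment`.

    Assumed rule: +1 where the bit is 1, -1 where it is 0,
    theta = number of 1 bits in the assignment.
    """
    weights = [1 if bit == 1 else -1 for bit in assignment]
    theta = sum(assignment)
    return weights, theta


def fires(weights, theta, x):
    """Threshold unit: output 1 if the weighted sum reaches theta, else 0."""
    total = sum(w * xi for w, xi in zip(weights, x))
    return 1 if total >= theta else 0


weights, theta = make_input_perceptron((0, 1, 1))
print(weights, theta)  # [-1, 1, 1] 2

# Check all 8 inputs: only (0, 1, 1) should make this unit fire.
firing = [x for x in product((0, 1), repeat=3) if fires(weights, theta, x)]
print(firing)  # [(0, 1, 1)]
```

The weighted sum can only reach $\theta$ when every $1$ of the assignment is present and no other variable is $1$, which is why the unit picks out exactly one input.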

**Output Perceptron:** This is essentially an OR gate: it outputs $1$ if any of the input perceptrons fires.

$$y(\vec{g}) = \begin{cases} 1 & \text{if } \sum_{i=1}^{n} g_i > 0 \\ 0 & \text{otherwise} \end{cases}$$
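Putting both layers together, here is a sketch that builds the network for any boolean function given as its list of satisfying assignments: one input perceptron per assignment (same assumed $\pm 1$ weighting as above), then an OR output unit with weight 1 on each input and threshold 1, which for 0/1 inputs is the same as "sum > 0". It is checked on 3-input parity (output 1 when an odd number of inputs are 1). The names `threshold`, `build_network`, and `evaluate` are hypothetical.

```python
from itertools import product


def threshold(weights, theta, inputs):
    """Perceptron with a step activation: 1 if w . x >= theta, else 0."""
    return 1 if sum(w * v for w, v in zip(weights, inputs)) >= theta else 0


def build_network(satisfying):
    """Hidden layer as (weights, theta) pairs, one per satisfying assignment.

    Assumed weighting: +1 on bits that are 1, -1 on bits that are 0,
    theta = number of 1 bits, so each unit fires on exactly one input.
    """
    return [([1 if b else -1 for b in a], sum(a)) for a in satisfying]


def evaluate(hidden, x):
    """Two-layer forward pass: hidden units g_i, then an OR output unit."""
    g = [threshold(w, theta, x) for w, theta in hidden]
    # OR gate: weight 1 on every g_i and theta 1, i.e. fire if sum(g) > 0.
    return threshold([1] * len(g), 1, g)


# 3-input odd parity: satisfied when an odd number of bits are 1.
inputs = list(product((0, 1), repeat=3))
satisfying = [x for x in inputs if sum(x) % 2 == 1]
hidden = build_network(satisfying)

print(len(hidden) + 1)  # 5 neurons: n = 4 input perceptrons plus 1 output

# Check every input against the parity function.
for x in inputs:
    assert evaluate(hidden, x) == sum(x) % 2
```

If the formula is unsatisfiable, the hidden layer is empty and the output unit never fires, which is still the right answer.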

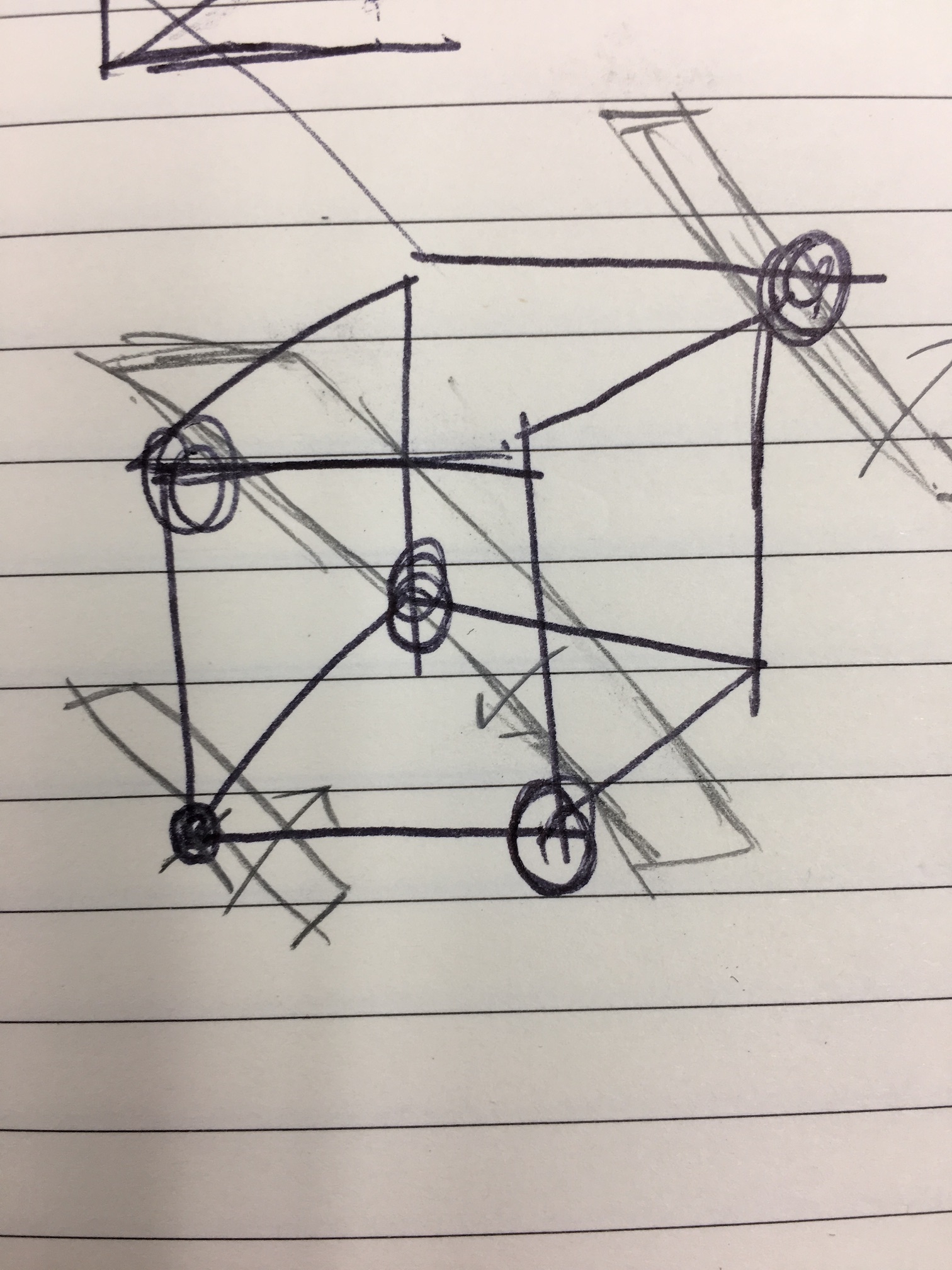

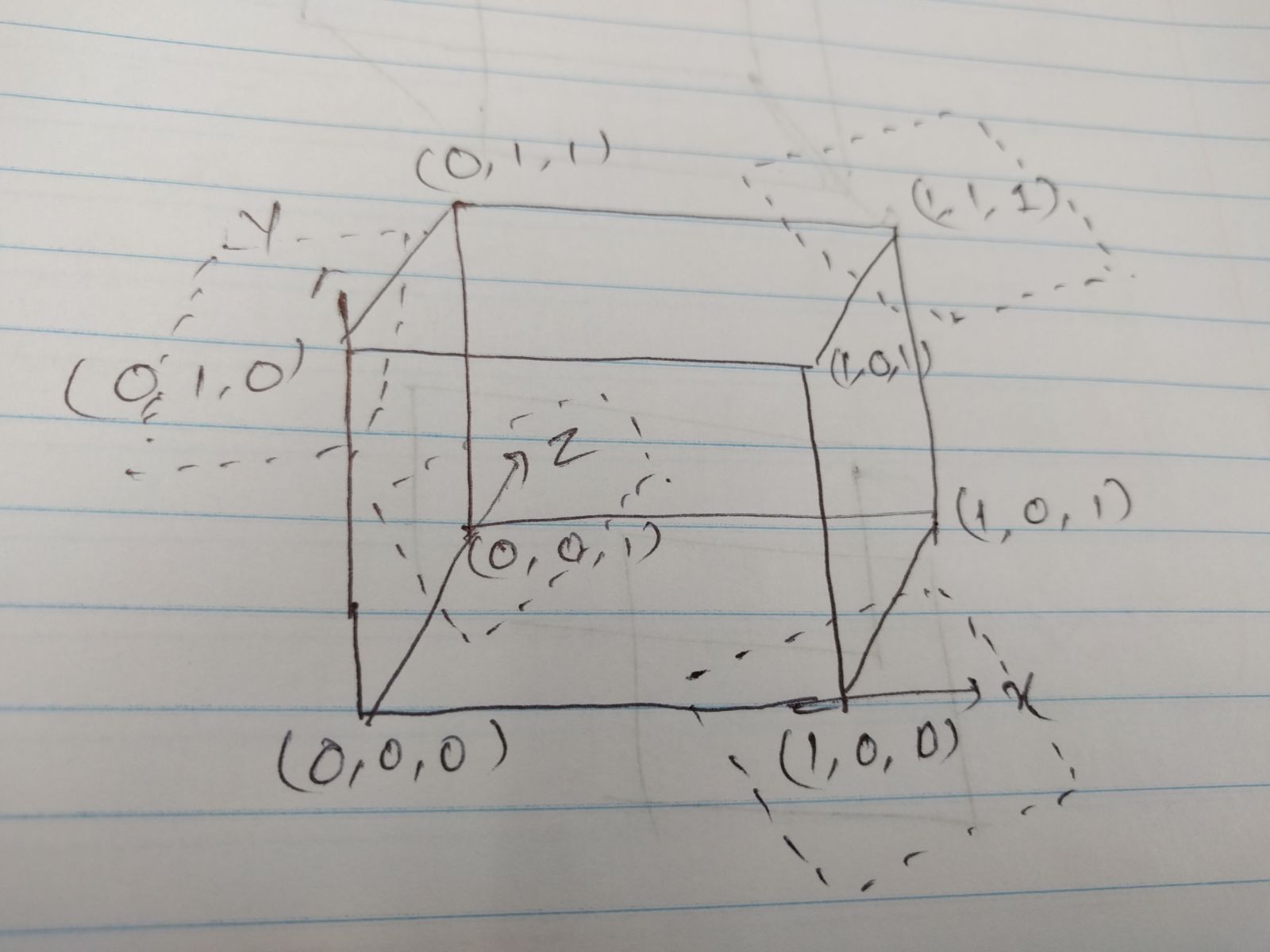

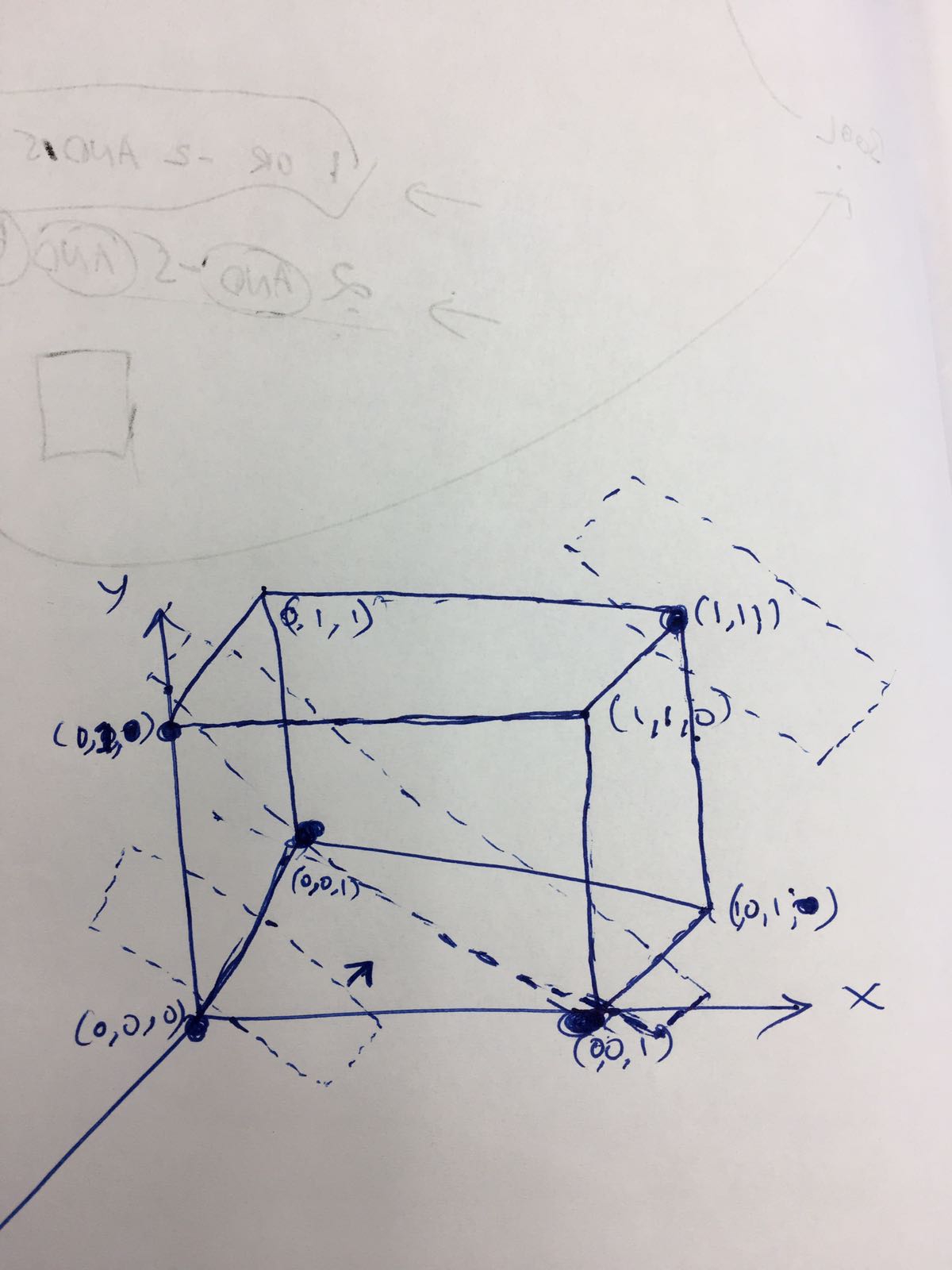

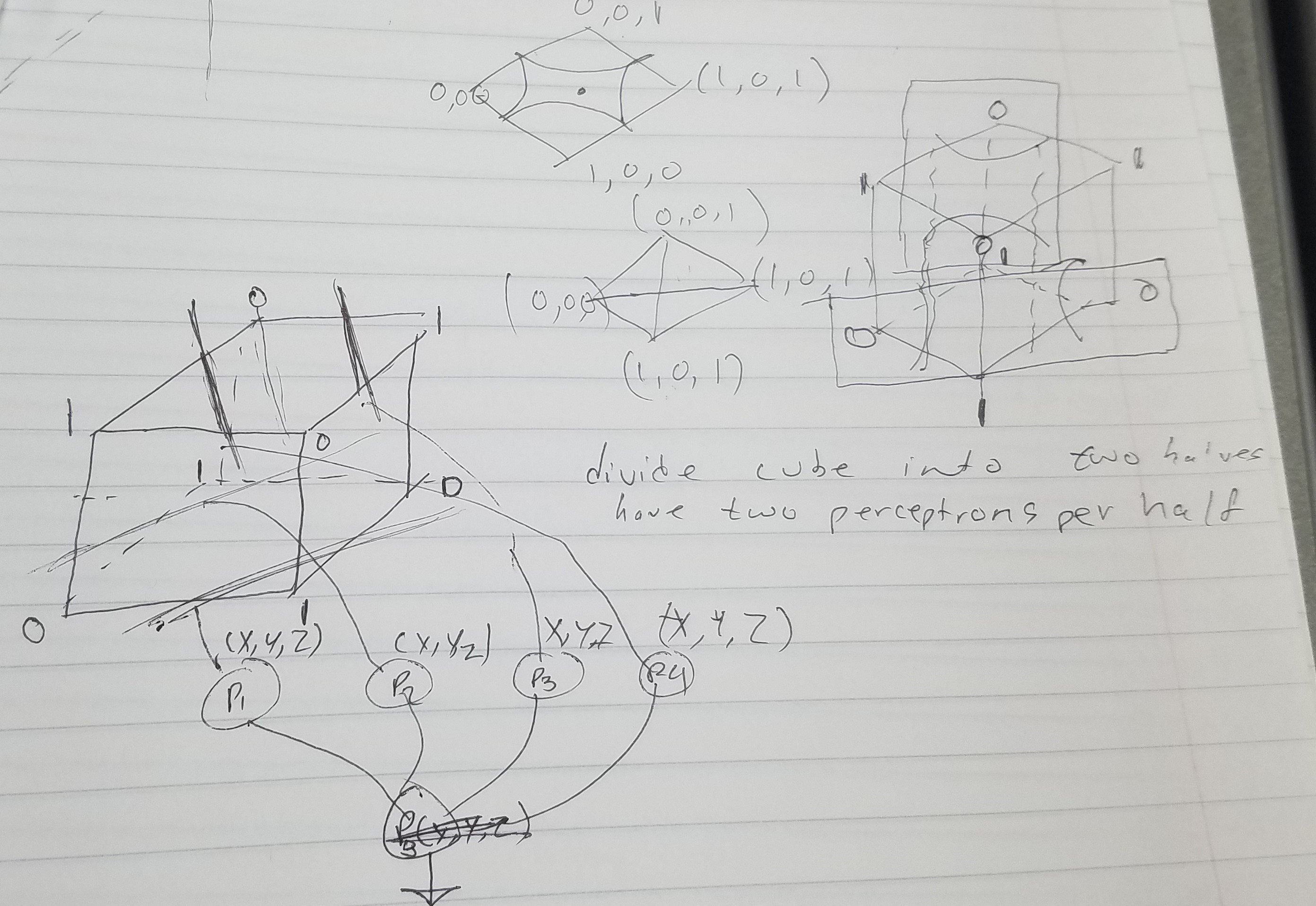

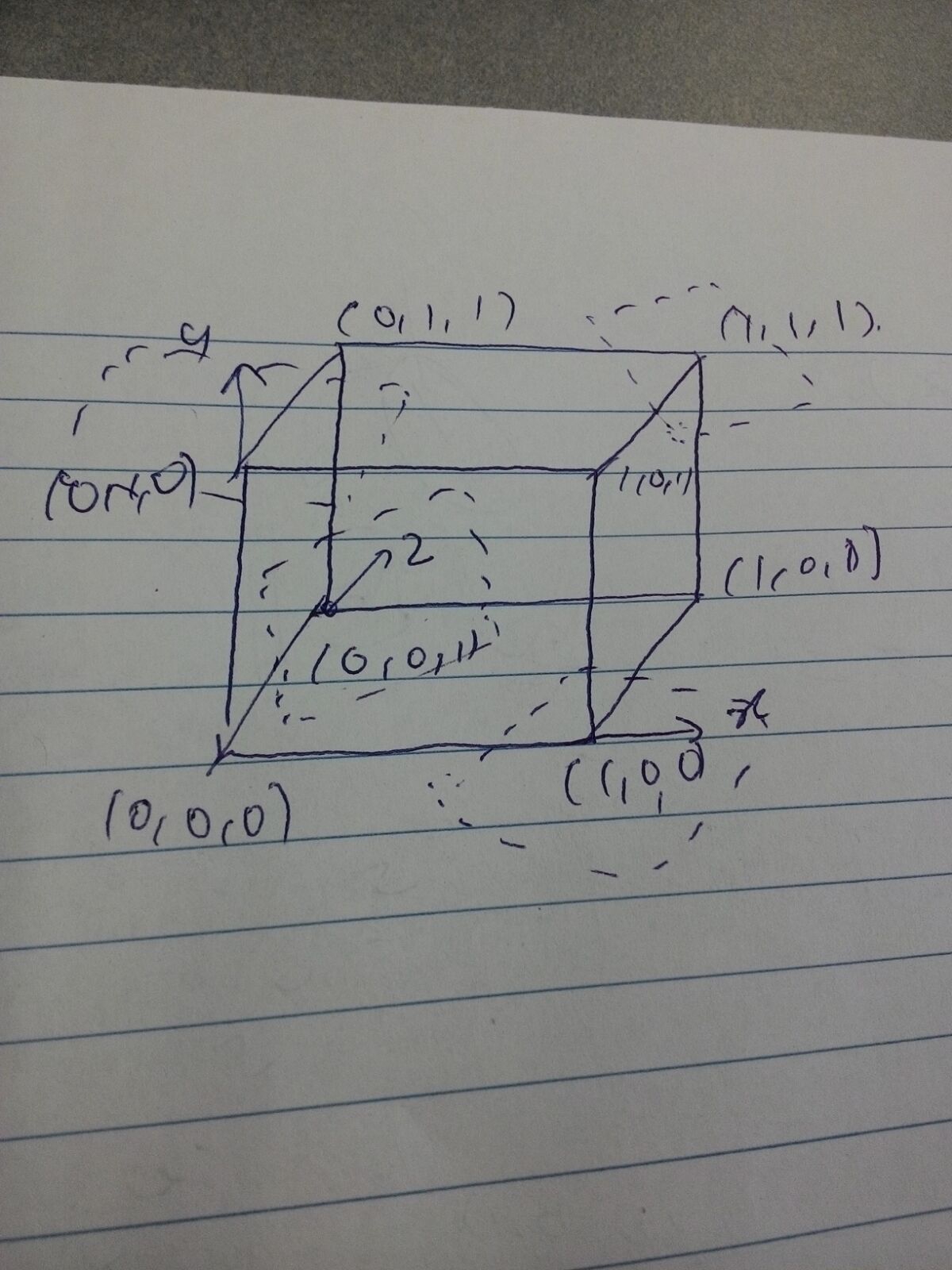

There are four points where the output should be 1:

- 001
- 010
- 100
- 111

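For 111, P1 has weights [1/3, 1/3, 1/3].

For 001, we can have P2 as [-1, -1, 1], and for 010, P3 as [-1, 1, -1]. One perceptron cannot cover both 001 and 010: with x1 = 0, separating {001, 010} from {000, 011} is XOR.

For 100, we can have P4 as [1, -1, -1].

Now, P5 takes the outputs of these four as inputs, with weights [1, 1, 1, 1].

Set theta to 1 for every perceptron (each fires when its weighted sum is at least 1).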
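To double-check a solution like this one, you can run the network on all eight inputs. A small sketch, assuming theta = 1 for every unit and one hidden unit per target point: [1/3, 1/3, 1/3] for 111, [-1, -1, 1] for 001, [-1, 1, -1] for 010, [1, -1, -1] for 100, then an output unit with all weights 1. `HIDDEN`, `step`, and `network_output` are hypothetical names; `Fraction` keeps the 1/3 weights exact so that 111 sums to exactly 1.

```python
from fractions import Fraction
from itertools import product

THETA = 1  # assumed: the same threshold for every perceptron

# One hidden perceptron per target point.
HIDDEN = {
    "111": [Fraction(1, 3)] * 3,
    "001": [-1, -1, 1],
    "010": [-1, 1, -1],
    "100": [1, -1, -1],
}


def step(weights, x, theta=THETA):
    """Fire (1) when the weighted sum reaches theta, else 0."""
    return 1 if sum(w * xi for w, xi in zip(weights, x)) >= theta else 0


def network_output(x):
    """Hidden layer, then an output unit with all weights 1 (an OR gate)."""
    g = [step(w, x) for w in HIDDEN.values()]
    return step([1] * len(g), g)


# Prints 1 exactly for 001, 010, 100 and 111, and 0 for the other four inputs.
for x in product((0, 1), repeat=3):
    label = "".join(map(str, x))
    print(label, network_output(x))
```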
