2017-10-30

2017-10-31

9) Write a Python function leakyReluLayer(weights, inputs, biases, alpha) that creates a layer of TensorFlow Tensor objects, each computing a leaky rectified linear unit.

Solution:

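import tensorflow as tf

def leakyReluLayer(weights, inputs, biases, alpha):
    # Pre-activation of every unit in the layer.
    z = tf.matmul(weights, inputs) + biases
    # Zero tensor with the same shape and dtype as z.
    tf_0 = tf.zeros_like(z)
    # Leaky ReLU: z where z > 0, alpha * z where z <= 0.
    return tf.maximum(tf_0, z) + alpha * tf.minimum(tf_0, z)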
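The leaky ReLU rule itself can be checked without TensorFlow. The following is a minimal sketch in plain Python, assuming list-of-rows weights and one bias per unit; the names leaky_relu and leaky_relu_layer are illustrative and not part of the original answer.

```python
def leaky_relu(z, alpha):
    """Leaky ReLU on one number: z if z > 0, otherwise alpha * z."""
    return max(0.0, z) + alpha * min(0.0, z)


def leaky_relu_layer(weights, inputs, biases, alpha):
    """Apply leaky ReLU to (weights . inputs + biases) using plain lists.

    weights: list of rows, one row per unit (assumed layout)
    inputs:  list of numbers, one per input
    biases:  list of numbers, one per unit
    """
    outputs = []
    for row, bias in zip(weights, biases):
        # Pre-activation of this unit: dot product plus bias.
        z = sum(w * x for w, x in zip(row, inputs)) + bias
        outputs.append(leaky_relu(z, alpha))
    return outputs
```

Positive pre-activations pass through unchanged, while negative ones are scaled by alpha rather than clipped to zero.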

1.

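from PIL import Image
import numpy as np

# Load the image and convert it to 8-bit grayscale.
img = Image.open('bob.png')
img = img.convert('L')

# Read the raw bytes as uint8, then reshape to (height, width).
# Note that img.size is (width, height).
img_array = np.frombuffer(img.tobytes(), dtype=np.uint8)
img_array = img_array.reshape(img.size[1], img.size[0])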

2.

You split the data set into training and test data several times and in several different ways, take your measurements on each of these trials, and combine the measurements by computing an appropriate aggregate (for example, the mean).

Exhaustive: cycle over all possible p-subsets of the data set; for each one, train on the data excluding the p-subset and test on the p-subset. This is called leave-p-out cross-validation.

Non-exhaustive: repeat m times: randomly choose a subset of size p as the test set, train on the remaining data, and test on that subset. This is called repeated random sub-sampling validation.
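Both splitting schemes can be sketched over index lists in plain Python. This is a minimal illustration under stated assumptions, not a reference implementation: the function names, the seed parameter, and the use of the mean as the aggregate are all choices made here, and evaluate stands for any hypothetical train-then-test routine that returns a score.

```python
import itertools
import random


def leave_p_out_splits(n, p):
    """Yield (train, test) index lists for every p-subset of range(n)."""
    for test in itertools.combinations(range(n), p):
        held_out = set(test)
        train = [i for i in range(n) if i not in held_out]
        yield train, list(test)


def random_subsampling_splits(n, p, m, seed=0):
    """Yield m (train, test) splits, each with a random test set of size p."""
    rng = random.Random(seed)  # seeded so the splits are reproducible
    for _ in range(m):
        test = sorted(rng.sample(range(n), p))
        held_out = set(test)
        train = [i for i in range(n) if i not in held_out]
        yield train, test


def cross_validate(splits, evaluate):
    """Aggregate evaluate(train, test) over all splits by taking the mean."""
    scores = [evaluate(train, test) for train, test in splits]
    return sum(scores) / len(scores)
```

Leave-p-out produces one split per p-subset, so the number of trials grows as n choose p; the random variant fixes the number of trials at m regardless of n.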

3.

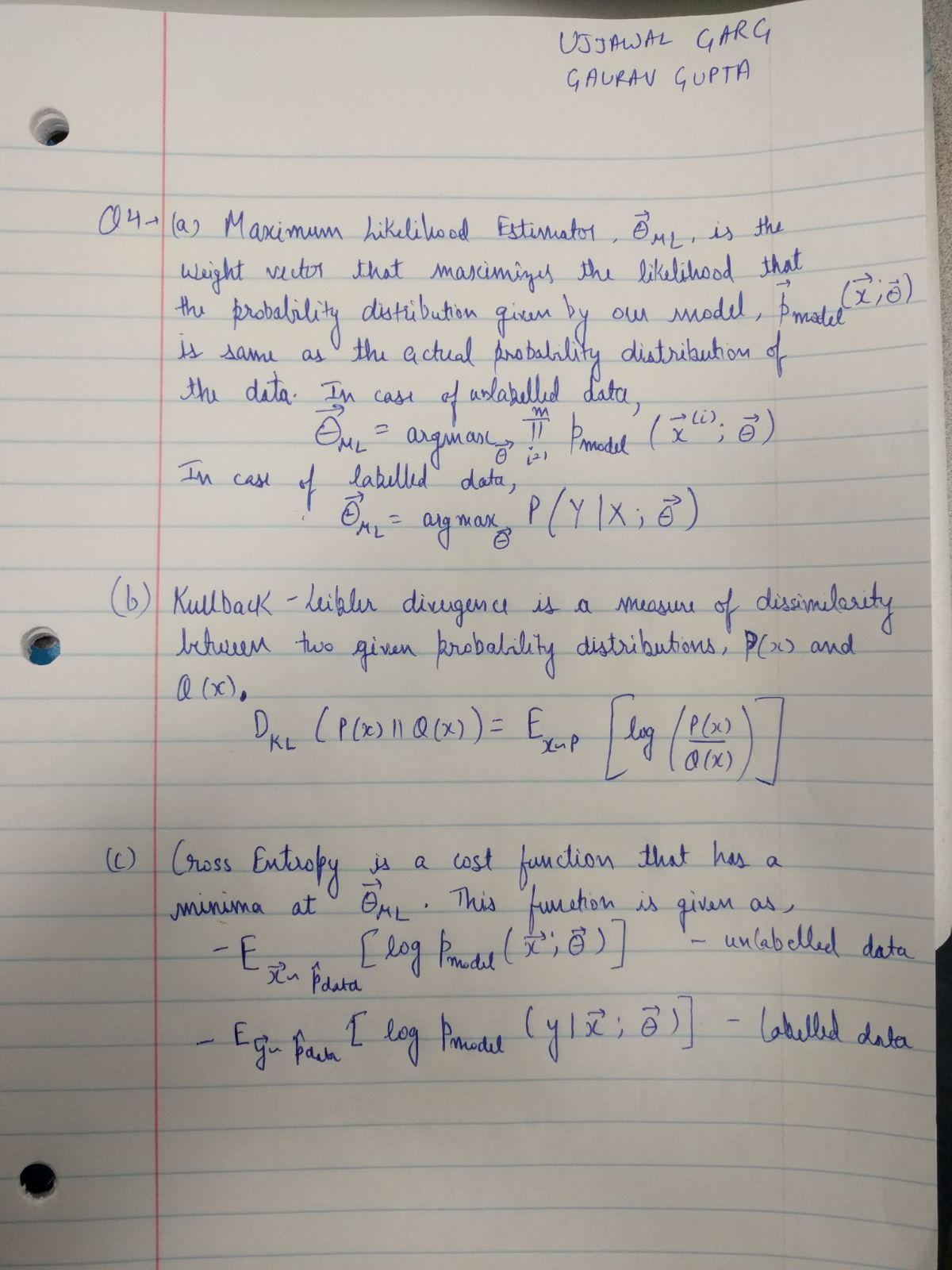

The Kullback-Leibler divergence is a measure of dissimilarity between two given probability distributions P and Q:

D_KL(P || Q) = E_{x~P}[log(P(x)/Q(x))].
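For discrete distributions the expectation is taken under P and becomes a sum of P(x) * log(P(x)/Q(x)). A minimal sketch in plain Python, assuming P and Q are given as equal-length lists of probabilities over the same outcomes (the function name kl_divergence is illustrative):

```python
import math


def kl_divergence(p, q):
    """Sum of P(x) * log(P(x) / Q(x)) over outcomes x, in nats.

    Outcomes with P(x) == 0 contribute 0 by convention. If Q(x) == 0
    where P(x) > 0, the divergence is infinite.
    """
    total = 0.0
    for px, qx in zip(p, q):
        if px > 0:
            if qx == 0:
                return math.inf
            total += px * math.log(px / qx)
    return total
```

The value is zero when the two distributions are identical, and it is not symmetric: swapping P and Q generally gives a different number.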

2017-11-02

Name: Krishna Vojjila

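import tensorflow as tf

def perceptron(weights, nodes, bias, activation):
    # Weighted sum of the inputs plus the bias, then the activation.
    z = tf.reduce_sum(weights * nodes, axis=-1) + bias
    return activation(z)

# TensorFlow 1.x graph API (tf.placeholder).
W = tf.Variable([0.0, 1.0, 0.0], dtype=tf.float32)
X = tf.placeholder(dtype=tf.float32, shape=(1, 3))
b = tf.Variable(0.3, dtype=tf.float32)
a = tf.sigmoid
layer1 = perceptron(W, X, b, a)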
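To see what this perceptron computes for a concrete input, here is a minimal sketch in plain Python that mirrors the same weights and bias. The input vector x is a hypothetical example, and summing the weighted inputs into a single pre-activation is an assumption about the intended behaviour.

```python
import math


def sigmoid(z):
    """Logistic sigmoid, mapping any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def perceptron(weights, nodes, bias, activation):
    """Weighted sum of the inputs plus the bias, passed through activation."""
    z = sum(w * x for w, x in zip(weights, nodes)) + bias
    return activation(z)


W = [0.0, 1.0, 0.0]   # same weights as in the TensorFlow answer
b = 0.3               # same bias
x = [1.0, 2.0, 3.0]   # hypothetical input, chosen for illustration

# Only the middle input carries weight, so z = 2.0 + 0.3 = 2.3.
out = perceptron(W, x, b, sigmoid)
```

With these weights the first and third inputs have no effect on the output.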

(c) 2025 Yioop - PHP Search Engine