2017-11-19

Problem with the architecture?

Hi,

Has anyone else run into the following problem while training?

When we use a sigmoid activation on the final output perceptron, it gives a value between 0 and 1. But we want a value of either 0 (when the image is not in the class we are training for) or 1 (when the image belongs to the class we are training for).

So we run it through a threshold to get an output of 0 or 1 and compare that to the actual y value to measure accuracy.
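The thresholding and accuracy step described above might look roughly like the sketch below. The cutoff of 0.5, the function names, and the sample values are assumptions for illustration; the post does not say which threshold was used.

```python
def threshold(probabilities, cutoff=0.5):
    """Map sigmoid outputs in (0, 1) to hard 0/1 predictions."""
    return [1 if p >= cutoff else 0 for p in probabilities]


def accuracy(predictions, labels):
    """Fraction of predictions that match the true labels."""
    correct = sum(1 for p, y in zip(predictions, labels) if p == y)
    return correct / len(labels)


# Hypothetical sigmoid outputs and true labels, for illustration only.
sigmoid_outputs = [0.91, 0.12, 0.48, 0.73]
y_true = [1, 0, 1, 1]

y_pred = threshold(sigmoid_outputs)
print(y_pred)                    # [1, 0, 0, 1]
print(accuracy(y_pred, y_true))  # 0.75
```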

The problem is that, when the sigmoid output is printed, the values are more or less the same for every image. Hence, we get a neural network that only ever outputs 0.

When testing on previously unseen data, if the test distribution is 80% images not belonging to the class and 20% images belonging to the class, the accuracy of our model is 80%, because the model always outputs 0, which is bound to be correct 80% of the time.
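To make the 80% figure concrete, here is a small sketch of a "model" that always outputs 0 on an 80/20 test set. The labels are synthetic, and the recall helper is an addition of this sketch, not something used in the thread. It shows why accuracy alone hides the problem: the accuracy looks acceptable, while recall on the positive class is zero.

```python
def accuracy(predictions, labels):
    """Fraction of predictions that match the true labels."""
    return sum(1 for p, y in zip(predictions, labels) if p == y) / len(labels)


def recall(predictions, labels):
    """Fraction of the truly positive examples that were predicted as 1."""
    on_positives = [p for p, y in zip(predictions, labels) if y == 1]
    return sum(on_positives) / len(on_positives)


# Synthetic test set: 80 negatives, 20 positives.
y_true = [0] * 80 + [1] * 20
# A degenerate model that predicts 0 for every image.
y_pred = [0] * len(y_true)

print(accuracy(y_pred, y_true))  # 0.8
print(recall(y_pred, y_true))    # 0.0
```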

We tried various loss functions and we still get the same result.

Please let us know if anyone is facing a similar problem.

Our group is also seeing similar behavior. The model predicts 0 every time, and since the test distribution is 80% negative images, we get ~80% accuracy. Is it correct to train with 80% negative and 20% positive images? Wouldn't that bias the model toward negative?

For sure, you can try to get the ratio of positive to negative examples closer to 50/50.
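One simple way to move toward 50/50 is to undersample the majority class. The sketch below is only an illustration under assumed names: `balance_by_undersampling` is a hypothetical helper, and file names stand in for the images. Weighting the loss per class is another common option and is not shown here.

```python
import random


def balance_by_undersampling(samples, labels, seed=0):
    """Return a shuffled subset with equal numbers of 0 and 1 labels."""
    rng = random.Random(seed)
    positives = [(x, y) for x, y in zip(samples, labels) if y == 1]
    negatives = [(x, y) for x, y in zip(samples, labels) if y == 0]
    # Keep as many examples per class as the smaller class has.
    keep = min(len(positives), len(negatives))
    balanced = rng.sample(positives, keep) + rng.sample(negatives, keep)
    rng.shuffle(balanced)
    xs = [x for x, _ in balanced]
    ys = [y for _, y in balanced]
    return xs, ys


# Hypothetical dataset: 20 positive and 80 negative images.
samples = [f"img_{i}.png" for i in range(100)]
labels = [1 if i < 20 else 0 for i in range(100)]

xs, ys = balance_by_undersampling(samples, labels)
print(len(ys), sum(ys))  # 40 20
```

The cost of this approach is that 60 of the 80 negative images are thrown away, which may matter when the dataset is small.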

Hi,

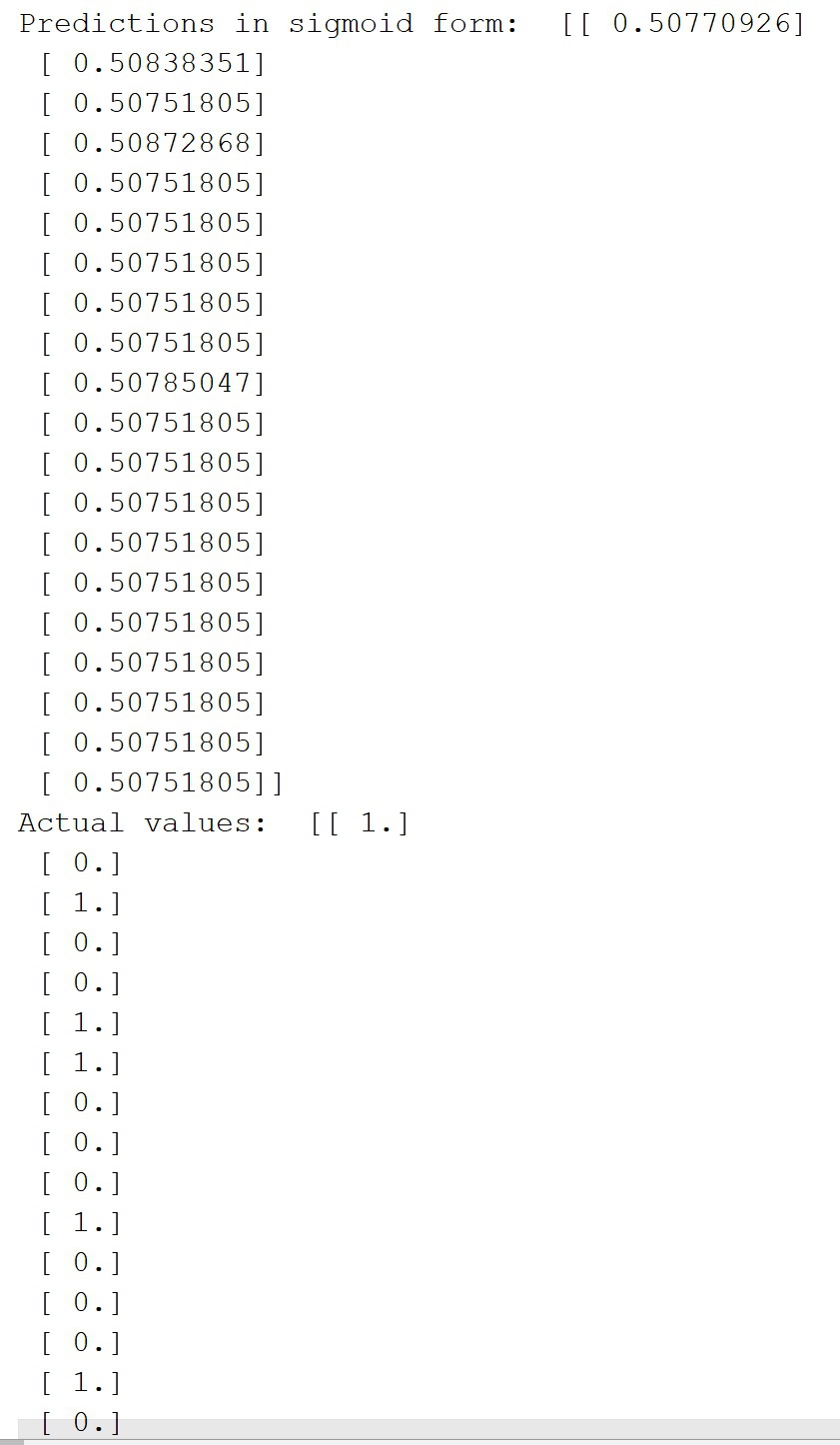

With 50-50 data I am getting 50% percent accuracy.I think the underlying issue is that the model is not training correctly. The sigmoid output remains the same irrespective of the image being fed in. In other words, there is no variation in activation. Please find below the output of the sigmoid layer from testing on 20 images and their actual values.

Hi,

With 50-50 data I am getting 50% accuracy. I think the underlying issue is that the model is not training correctly. The sigmoid output remains the same irrespective of the image being fed in. In other words, there is no variation in activation. Please find below the output of the sigmoid layer from testing on 20 images, along with their actual values.

((resource:WhatsApp Image 2017-11-19 at 7.13.06 PM.jpeg|Resource Description for WhatsApp Image 2017-11-19 at 7.13.06 PM.jpeg))

In the above result, 1 means that the image belongs to the class being trained for and 0 means that it does not. Of the 20 test images, 6 are labeled 1, and rounding the sigmoid output always gives 1, so the model is correct only 30 percent of the time (6 out of 20).
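A quick way to confirm that the output is stuck is to measure how much it varies across test images. The sketch below uses made-up values (a constant 0.52, not the numbers from the screenshot) with the same 6-positive, 14-negative split, and reproduces the 30 percent figure.

```python
import statistics


def output_spread(outputs):
    """Return (max - min, population standard deviation) of the outputs."""
    return max(outputs) - min(outputs), statistics.pstdev(outputs)


# Hypothetical stuck sigmoid outputs for 20 test images.
stuck_outputs = [0.52] * 20
# 6 images in the class, 14 not in the class.
y_true = [1] * 6 + [0] * 14

# Rounding with a 0.5 cutoff turns every output into 1.
y_pred = [1 if p >= 0.5 else 0 for p in stuck_outputs]
acc = sum(1 for p, y in zip(y_pred, y_true) if p == y) / len(y_true)

print(output_spread(stuck_outputs))  # (0.0, 0.0)
print(acc)                           # 0.3
```

A spread at or near zero means the network is ignoring its input, so the accuracy simply mirrors the class ratio of the test set.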

(Edited: 2017-11-19) Have you tried a different activation function and seen if it makes a difference?

Have you tried two output neurons with softmax, where 10 means in class and 01 means out of class?
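The two-neuron encoding suggested here could be sketched as follows. This is a plain-Python illustration, not the group's network code: the logits are hypothetical, and `one_hot` and `decode` are names invented for this sketch.

```python
import math


def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    shift = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - shift) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def one_hot(label):
    """Encode label 1 (in class) as [1, 0] and label 0 (out) as [0, 1]."""
    return [1, 0] if label == 1 else [0, 1]


def decode(probs):
    """Map the two softmax outputs back to a 0/1 label."""
    return 1 if probs[0] >= probs[1] else 0


# Hypothetical raw outputs of the two final neurons for one image.
logits = [2.0, 0.5]
probs = softmax(logits)

print(one_hot(1), one_hot(0))  # [1, 0] [0, 1]
print(decode(probs))           # 1
```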

Hi,

We tried a binary classifier with [1,0] and [0,1] targets and softmax, and the results are better. The neurons no longer give the same value, and the intensity of the activation now varies. The network seems to actually learn something.
