2021-08-29

Non-parametric way:

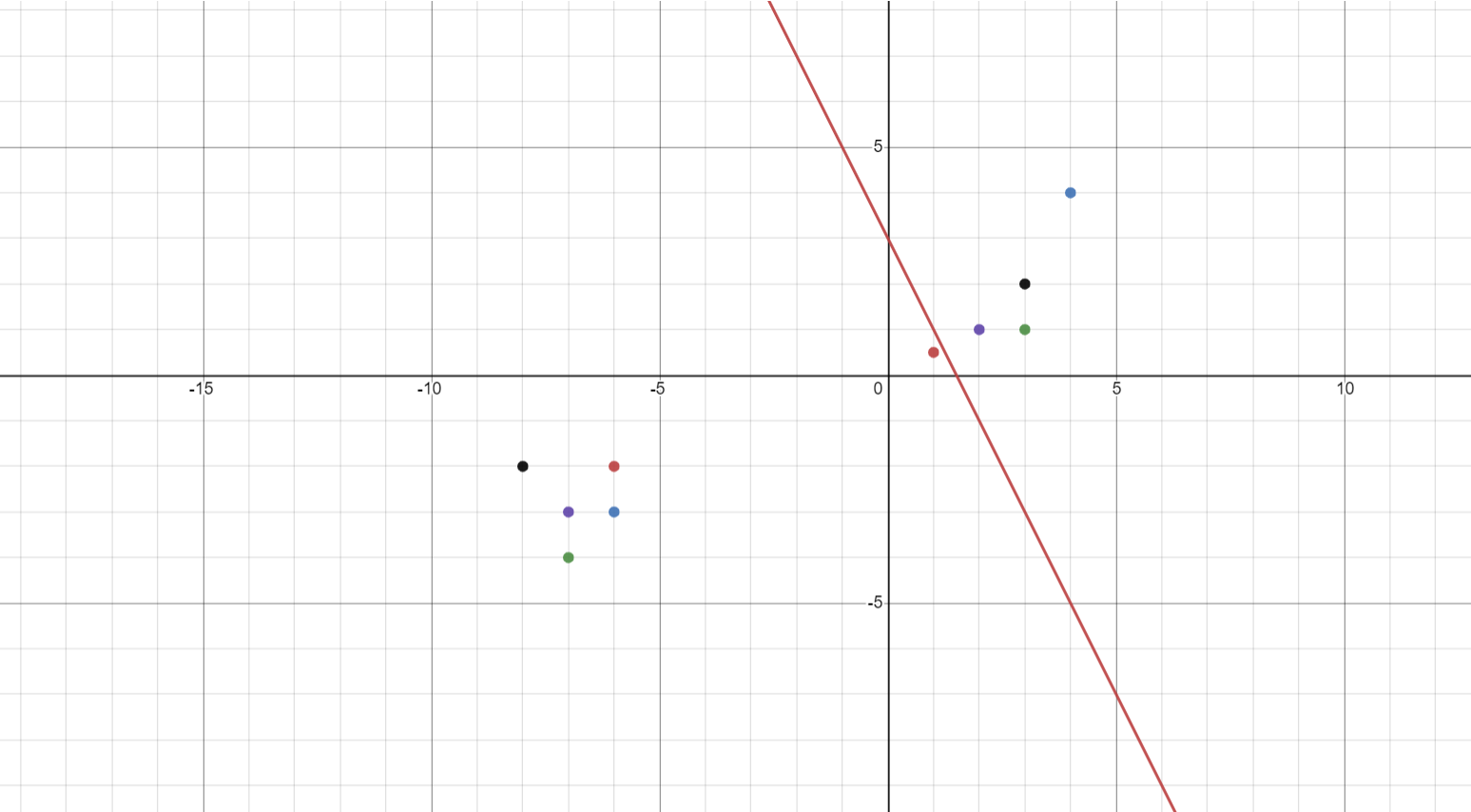

On a graph with X and Y axes, consider the line x = y (slope 1). Points below the line lie in the half-space and are labeled ((x, y), 1); points above the line lie outside it and are labeled ((x, y), 0).

Scenario 1: As marked in the figure, consider a set of labeled training points, and use a KNN classifier with K = 3 as the non-parametric learner. For the point Q, the three nearest training points are K, I, and J, so Q is classified as lying in the half-space, which is the correct label.

Scenario 2: For the point P, the three nearest training points are A, F, and B. These neighbors lie outside the half-space, so KNN labels P as not being in the half-space, even though P itself lies in the half-space. This is a misclassification.
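A minimal K = 3 sketch of both scenarios. The figure's coordinates are not given in the text, so the training points, the two query points, and the helper names `label` and `knn_predict` are hypothetical: the query standing in for Q has neighbors inside the half-space, while the query standing in for P lies inside the half-space (below y = x) but its three nearest neighbors lie above the line.

```python
import math
from collections import Counter

def label(x, y):
    """Ground truth: 1 if (x, y) lies below the line y = x (the half-space), else 0."""
    return 1 if y < x else 0

def knn_predict(train, query, k=3):
    """Majority vote among the k training points closest to query (Euclidean distance)."""
    nearest = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(lbl for _, lbl in nearest)
    return votes.most_common(1)[0][0]

# Hypothetical training points (the figure's real coordinates are not given),
# each labeled with the true rule.
points = [(4.5, 5), (5.5, 6), (5, 5.5), (8, 2), (9, 3), (7, 1)]
train = [(p, label(*p)) for p in points]

q = (8, 2.5)  # plays the role of Q: neighbors lie in the half-space
p = (5, 4.8)  # plays the role of P: inside the half-space, neighbors above the line
print(knn_predict(train, q), label(*q))  # 1 1  (correct)
print(knn_predict(train, p), label(*p))  # 0 1  (misclassified)
```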

Parametric way:

In the parametric learning method, one source of error is the finite precision of the parameters: if a parameter such as the slope of the line (or a coordinate that defines it) needs more bits than are available to store it, it gets rounded, and points lying close to the true boundary can then be classified incorrectly.
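To make the precision point concrete, the sketch below stores a hypothetical slope of 1/3 as a 32-bit float using Python's `struct` module and compares it with the 64-bit value. The point (3, 1.00000001) lies just above the true line y = x/3, so it is outside the half-space below the line, but the slightly larger 32-bit slope puts it below the stored line and flips its label. The slope, the point, and the helper names are illustrative assumptions, not values from the exercise.

```python
import struct

def to_float32(value):
    """Round a 64-bit Python float to the nearest 32-bit float and back."""
    return struct.unpack("f", struct.pack("f", value))[0]

def in_half_space(x, y, slope):
    """1 if (x, y) lies below the line y = slope * x, else 0."""
    return 1 if y < slope * x else 0

slope64 = 1 / 3                # hypothetical slope, stored with 64 bits
slope32 = to_float32(slope64)  # same slope squeezed into 32 bits (slightly above 1/3)

point = (3.0, 1.00000001)      # just above the true line, which passes through (3, 1)
print(in_half_space(*point, slope64))  # 0: correct, the point is above the line
print(in_half_space(*point, slope32))  # 1: wrong, caused only by rounding the slope
```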

(Edited: 2021-08-29) ((resource:ML Assignement.PNG|Resource Description for ML Assignement.PNG))

We can use k-nearest neighbors (KNN) as a non-parametric way of deciding whether a point lies in the half-plane.

Example of an incorrect KNN result:

Example: Consider the line 2x + y = 3 shown in the graph above, where the half-plane is the side containing (3, 1), i.e. 2x + y > 3. The point (1, 0.5) lies outside the half-plane, since 2(1) + 0.5 = 2.5 < 3, but it is labeled as inside because its k = 3 nearest training points all lie within the half-plane.

Example of a correct KNN result:

The point (3, 1) is correctly labeled as inside the half-plane (2(3) + 1 = 7 > 3), since its k = 3 nearest neighbors also lie within the half-plane.
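The membership test behind these two examples is a single linear inequality. A sketch, assuming the half-plane is the side 2x + y > 3 (the side containing (3, 1)); the function name `in_half_plane` is hypothetical:

```python
def in_half_plane(x, y):
    """1 if (x, y) satisfies 2x + y > 3 (assumed orientation of the half-plane), else 0."""
    return 1 if 2 * x + y > 3 else 0

print(in_half_plane(1, 0.5))  # 0, since 2(1) + 0.5 = 2.5 < 3
print(in_half_plane(3, 1))    # 1, since 2(3) + 1 = 7 > 3
```

A KNN classifier never evaluates this inequality; it only sees the labels of nearby training points, which is how (1, 0.5) can end up mislabeled.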

If we choose a parametric way of learning the half-plane, we need the slope of the line, which is given to us. If we do not have enough bits to represent the slope exactly, an inaccurate value is passed to the model and its predictions can be wrong. For example, if the slope of the line is 0.256 but we can only store it as 0.26 (because we do not have enough bits for the full value), the model's boundary no longer matches the true line, and points close to it may be misclassified.
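The effect of rounding 0.256 to 0.26 can be checked numerically. This sketch assumes, for illustration only, a line through the origin y = m * x with the half-plane below it, and a hypothetical test point (100, 25.8): at x = 100 the true line is at y = 25.6 and the rounded one at y = 26. Python's `round` stands in for "not enough bits"; the helper name `below_line` is hypothetical.

```python
def below_line(x, y, slope):
    """1 if (x, y) lies below the line y = slope * x, else 0."""
    return 1 if y < slope * x else 0

true_slope = 0.256
stored_slope = round(true_slope, 2)  # 0.26: assume only two decimal digits fit

point = (100, 25.8)  # hypothetical point between the true and the rounded line
print(below_line(*point, true_slope))    # 0: 25.8 is above 25.6
print(below_line(*point, stored_slope))  # 1: 25.8 is below 26.0, a misclassification
```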

The maximum size of the data type used to store the slope therefore influences the error: the fewer bits available, the larger the rounding error.

(Edited: 2021-08-29) ((resource:In Class Fall.png|Resource Description for In Class Fall.png))

((resource:AI_INCLASS_EXERCISE.pdf|Resource Description for AI_INCLASS_EXERCISE.pdf))

Let the half-space be the part of the 2D plane above the line y = 0. A point in the half-space is labeled ((x, y), 1), and a point outside it is labeled ((x, y), 0).

'''Non-Parametric Approach''':

We use the k-nearest neighbors (k-NN) method, a non-parametric classification method, to classify the points, with k = 3.

Our k-NN classifier is trained on a dataset containing 11 points:

((3.5, 10.5), 1), ((5, 10), 1), ((7.5, 9), 1), ((8, 11), 1), ((9.4, 9.6), 1), ((10.3, 10.8), 1), ((6, -1), 0), ((7, -2), 0), ((8.5, -1.5), 0), ((8.5, -3), 0), ((10, -0.7), 0)

'''Correct classification''': The point P1 (3, 7) is classified as 1 because its three nearest neighbors, (3.5, 10.5), (5, 10), and (7.5, 9) (squared distances 12.5, 13, and 24.25), all lie in the half-space. The point (8, 11) is farther away, at squared distance 41.

'''Incorrect Classification (Misclassification)''': The point P2 (8, 1) is misclassified as 0 because its three nearest neighbors, (6, -1), (8.5, -1.5), and (10, -0.7), all lie outside the half-space. It should have been classified as 1, since it lies above the line y = 0.
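Both predictions can be reproduced with a short k = 3 sketch over the 11 training points listed above. The helper names `nearest` and `predict` are hypothetical; the loop also prints the neighbors each prediction used.

```python
import math
from collections import Counter

# Training set from the exercise: ((x, y), label), where label 1 means y > 0.
train = [
    ((3.5, 10.5), 1), ((5, 10), 1), ((7.5, 9), 1), ((8, 11), 1),
    ((9.4, 9.6), 1), ((10.3, 10.8), 1),
    ((6, -1), 0), ((7, -2), 0), ((8.5, -1.5), 0), ((8.5, -3), 0), ((10, -0.7), 0),
]

def nearest(query, k=3):
    """The k training examples closest to query by Euclidean distance."""
    return sorted(train, key=lambda item: math.dist(item[0], query))[:k]

def predict(query, k=3):
    """Majority label among the k nearest neighbors."""
    return Counter(lbl for _, lbl in nearest(query, k)).most_common(1)[0][0]

for name, q in [("P1", (3, 7)), ("P2", (8, 1))]:
    truth = 1 if q[1] > 0 else 0  # true label: above the line y = 0
    print(name, [pt for pt, _ in nearest(q)], "predicted", predict(q), "true", truth)
# P1 -> predicted 1, true 1; P2 -> predicted 0, true 1
```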

'''Parametric Approach''': Suppose the true line bounding the half-space is y = 10^-33 * x, but because of the limited number of bits available to store the slope, it is approximated as y = 0. The point (10^33, 0.5) then lies below the true line (which passes through y = 1 at x = 10^33) and should be classified as 0, but it is misclassified as 1 because it lies above the line y = 0.
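The rounding in this parametric example can be sketched directly. Here the rounding of 10^-33 to 0 is simulated by setting the stored slope to 0.0; that value is an assumption standing in for whatever limited storage format is meant (a standard 64-bit float can in fact hold a value very close to 1e-33). The helper name `above_line` is hypothetical.

```python
def above_line(x, y, slope):
    """Label 1 if (x, y) lies above the line y = slope * x, else 0."""
    return 1 if y > slope * x else 0

true_slope = 1e-33
stored_slope = 0.0  # assumed: the limited format rounds the slope to 0

point = (1e33, 0.5)  # at x = 1e33 the true line is at y = 1 (up to float rounding)
print(above_line(*point, true_slope))    # 0: 0.5 < 1, correct
print(above_line(*point, stored_slope))  # 1: 0.5 > 0, misclassified
```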

(Edited: 2021-08-30) ((resource:graph-knn.PNG|Resource Description for graph-knn.PNG))

A non-parametric algorithm that can be used is k-nearest neighbors. Let the line y = 1 divide the plane: all points with y greater than 1 fall in the half-space.

The points used to train KNN are ((3, 3), 1), ((4, 3), 1), ((4, 10), 1), ((3, 6), 1), ((5, 10), 1), ((3, -1), 0), ((3, -5), 0), ((4, -5), 0), and ((5, -10), 0).

The points in the test dataset are P1 = (4, 9) and P2 = (4, 0).

Let k = 3, so the three nearest neighbors are used to compute each prediction.

Correctly Predicted

After computing the Euclidean distances, the three nearest neighbors of P1 (4, 9) are ((4, 10), 1), ((5, 10), 1), and ((3, 6), 1). All three are labeled 1, so P1 is correctly predicted to lie in the half-space.

Wrongly Predicted

After computing the Euclidean distances, the three nearest neighbors of P2 (4, 0) are ((3, -1), 0), ((4, 3), 1), and ((3, 3), 1). Two of the three are labeled 1, so P2 is predicted to lie in the half-space, which is wrong: its y-coordinate, 0, is less than 1.

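Increasing k helps, but not immediately: with k = 4 the fourth neighbor is ((4, -5), 0) (squared distance 25), giving a 2-2 tie, so the prediction depends on how ties are broken. With k = 5 the fifth neighbor, ((3, -5), 0) (squared distance 26), gives a 3-2 majority for label 0, and P2 is predicted correctly.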
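A sketch that reruns this example for k = 3, 4, and 5. The helper name `knn_vote` is hypothetical; it reports a tie as `None` instead of silently breaking it, so the k = 4 case is visible.

```python
import math
from collections import Counter

# Training set from the exercise: ((x, y), label), where label 1 means y > 1.
train = [
    ((3, 3), 1), ((4, 3), 1), ((4, 10), 1), ((3, 6), 1), ((5, 10), 1),
    ((3, -1), 0), ((3, -5), 0), ((4, -5), 0), ((5, -10), 0),
]

def knn_vote(query, k):
    """Majority label of the k nearest training points, or None on a tie."""
    nearest = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    counts = Counter(lbl for _, lbl in nearest)
    if counts[0] == counts[1]:
        return None  # tie: the result depends on the tie-breaking rule
    return 1 if counts[1] > counts[0] else 0

for k in (3, 4, 5):
    print(k, knn_vote((4, 9), k), knn_vote((4, 0), k))
# 3 1 1
# 4 1 None
# 5 1 0
```

P1 (true label 1) stays correct for every k, while P2 (true label 0) goes from wrong at k = 3, to tied at k = 4, to correct at k = 5.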
