2018-12-10

Final Practice .

Q4.

Team Member - Riti Gupta, Anand Vishwakarma

Q4.

Team Member - Riti Gupta, Anand Vishwakarma

((resource:final.pdf|Resource Description for final.pdf))

Problem 3 and 8: Group Priyanka, Anjitha, Neeraj Problem 3: Document Partitioning: An index partitioning scheme where each node holds an index for a subsetof documents in collection. This type of partitioning is called intra-query parallelism.

A hash value will be calculated of the documents and then the documentswill be assigned to each node based on the hash values. Eg. Document number [1,2,3] and their corresponding hash values are [9,7,9].

From the above example, all documents with hash 7 will be assigned to node1 and hash 9 to node 2. Term Partitioning:

Each node is responsible for a subset of postings lists of terms in theindex. This is a type of intra-query parallelism. Eg. All terms starting with a or b assigned to node 1, terms starting with c or d is assigned to node 2, etc. Node 1 -> index for apple, bark Node 2 -> index for car, cat Problem 8: Dangling node matrix:

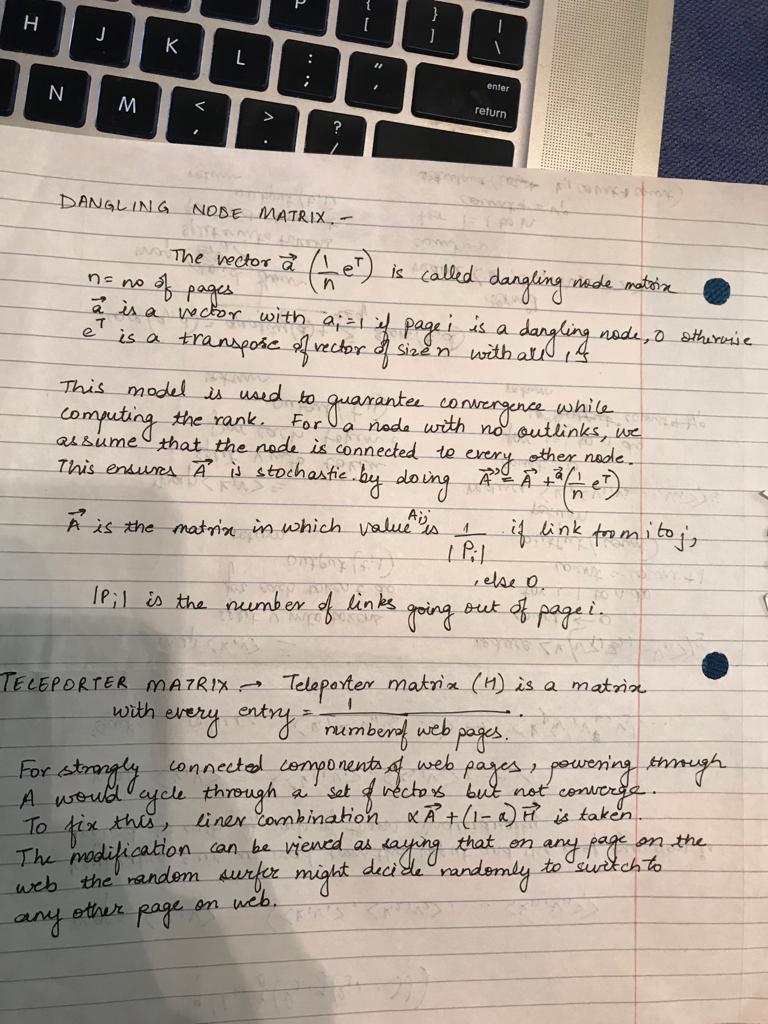

Dangling node matrix is used to ensure that the adjacency matrix used forPageRank calculations is row stochastic.

A node in the graph which has no outgoing links is called a dangling node.The presence of such a node will cause the adjacency matrix to not be stochastic. To correct this we assume that the dangling node has outgoing edges to all other nodes in the graph. Eg. Consider the following graph adjacency matrix [0 1 1]

[1 0 0] [0 0 0]The dangling node matrix is constructed using a*(1/n)*[eT] Where ai = 1 if i is a dangling node and 0 otherwise E is a vector of ones. For the above example a = [0 0 1]T And e is [1 1 1] The dangling node matrix is as follows: [0 0 0] [0 0 0] [0.33 0.33 0.33] Teleporter matrix:

Teleporter matrix is used to work with strongly connected components inthe graph.This is used to support a random surfer model where there is a possibility that a uses may give up the following links from a given page and visit a completely new page. The matrix is of size nxn with every element being 1/n. Eg. n = 3 the matrix is [0.33 0.33 0.33] [0.33 0.33 0.33] [0.33 0.33 0.33] (Edited: 2018-12-10)

<pre>

Problem 3 and 8: Group Priyanka, Anjitha, Neeraj

Problem 3:

Document Partitioning:

An index partitioning scheme where each node holds an index for a subset

of documents in collection. This type of partitioning is called intra-query parallelism.

A hash value will be calculated of the documents and then the documents

will be assigned to each node based on the hash values.

Eg. Document number [1,2,3] and their corresponding hash values are [9,7,9].

From the above example, all documents with hash 7 will be assigned to node

1 and hash 9 to node 2.

Term Partitioning:

Each node is responsible for a subset of postings lists of terms in the

index. This is a type of intra-query parallelism.

Eg. All terms starting with a or b assigned to node 1, terms starting with c or d

is assigned to node 2, etc.

Node 1 -> index for apple, bark

Node 2 -> index for car, cat

Problem 8:

Dangling node matrix:

Dangling node matrix is used to ensure that the adjacency matrix used for

PageRank calculations is row stochastic.

A node in the graph which has no outgoing links is called a dangling node.

The presence of such a node will cause the adjacency matrix to not be stochastic.

To correct this we assume that the dangling node has outgoing edges to all other

nodes in the graph.

Eg. Consider the following graph adjacency matrix

[0 1 1]

[1 0 0]

[0 0 0]

The dangling node matrix is constructed using a*(1/n)*[eT]

Where ai = 1 if i is a dangling node and 0 otherwise

E is a vector of ones. For the above example a = [0 0 1]T

And e is [1 1 1]

The dangling node matrix is as follows:

[0 0 0]

[0 0 0]

[0.33 0.33 0.33]

Teleporter matrix:

Teleporter matrix is used to work with strongly connected components in

the graph.This is used to support a random surfer model where there is a

possibility that a uses may give up the following links from a given page and

visit a completely new page. The matrix is of size nxn with every element being 1/n.

Eg. n = 3 the matrix is

[0.33 0.33 0.33]

[0.33 0.33 0.33]

[0.33 0.33 0.33]

</pre>

Q6) Team members: Jonathan, Shehba map(docid, document): for term in document: output(term, 1) reduce(termid, [v1, v2, ...]): num_occurrences = 0 for v_i in v: num_occurrences += v_i output(termid, num_occurrences * num_occurrences)

<pre>

Q6)

Team members: Jonathan, Shehba

map(docid, document):

for term in document:

output(term, 1)

reduce(termid, [v1, v2, ...]):

num_occurrences = 0

for v_i in v:

num_occurrences += v_i

output(termid, num_occurrences * num_occurrences)

</pre>

Q 2 and 9)

Team members: Avinash, Preethi

Q 2)

The basic DFR considers all the documents have the same length, given by

`(log(1+(l_t)/N)+f_(t,d) log(1+(N)/(l_t)))/(f_(t,d)+1)` ---- 1

Document Length Normalization is incorporated into 1 by adjusting the term frequency as computed by Amati and Rijsbergen as

`f'_(t,d)=f_(t,d)⋅log(1+(l_(avg))/(l_d)) ` -----2

Plugging in 2 in 1, we get the DFR equation as

`(log(1+(l_t)/N)+f'_(t,d) .log(1+ (l_(avg))/(l_d) ).log(1+N/(l_t)))/(( f'_(t,d) .log(1+ (l_(avg))/(l_d) ))+1)`

Here

`l_t` – total occurrences of the term in the collection

`l_(avg)` – Average length of the documents

N – Number of documents

`l_d` – Length of the document

`f_(t,d)` – Frequency of the term in the document

Q 9)

The only matrix used in HITS algorithm is the adjacency matrix L. From L and `L^T`, the symmetric positive semidefinite matrices `LL^T` and `L^TL` leads to convergence across iteration in HITS algorithm.

In SALSA, we normalize the rows of the adjacency matrix L to form `L_r` and also normalize the columns of L to form `L_c`. We then use `L_rL_c^T`, `L_c^TL_r` to form the hub matrix H and authority matrix A respectively. This normalization makes SALSA immune to topic drift.

Eg : L = `[[0,1,0,0],[1,0,1,0],[0,1,1,1],[0,0,0,0]]` is used as adjacency matrix in HITS

This is transformed to

`L_r` = `[[0,1,0,0],[1/2,0,1/2,0],[0,1/3,1/3,1/3],[0,0,0,0]]` and

`L_c` = `[[0,1/2,0,0],[1,0,1/2,0],[0,1/2,1/2,1],[0,0,0,0]]`

which is used in SALSA to compute the authority and hub vectors

(Edited: 2018-12-12) Q 2 and 9)

Team members: Avinash, Preethi

Q 2)

The basic DFR considers all the documents have the same length, given by

@BT@(log(1+(l_t)/N)+f_(t,d) log(1+(N)/(l_t)))/(f_(t,d)+1)@BT@ ---- 1

Document Length Normalization is incorporated into 1 by adjusting the term frequency as computed by Amati and Rijsbergen as

@BT@f'_(t,d)=f_(t,d)⋅log(1+(l_(avg))/(l_d)) @BT@ -----2

Plugging in 2 in 1, we get the DFR equation as

@BT@(log(1+(l_t)/N)+f'_(t,d) .log(1+ (l_(avg))/(l_d) ).log(1+N/(l_t)))/(( f'_(t,d) .log(1+ (l_(avg))/(l_d) ))+1)@BT@

Here

@BT@l_t@BT@ – total occurrences of the term in the collection

@BT@l_(avg)@BT@ – Average length of the documents

N – Number of documents

@BT@l_d@BT@ – Length of the document

@BT@f_(t,d)@BT@ – Frequency of the term in the document

Q 9)

The only matrix used in HITS algorithm is the adjacency matrix L. From L and @BT@L^T@BT@, the symmetric positive semidefinite matrices @BT@LL^T@BT@ and @BT@L^TL@BT@ leads to convergence across iteration in HITS algorithm.

In SALSA, we normalize the rows of the adjacency matrix L to form @BT@L_r@BT@ and also normalize the columns of L to form @BT@L_c@BT@. We then use @BT@L_rL_c^T@BT@, @BT@L_c^TL_r@BT@ to form the hub matrix H and authority matrix A respectively. This normalization makes SALSA immune to topic drift.

Eg : L = @BT@[[0,1,0,0],[1,0,1,0],[0,1,1,1],[0,0,0,0]]@BT@ is used as adjacency matrix in HITS

This is transformed to

@BT@L_r@BT@ = @BT@[[0,1,0,0],[1/2,0,1/2,0],[0,1/3,1/3,1/3],[0,0,0,0]]@BT@ and

@BT@L_c@BT@ = @BT@[[0,1/2,0,0],[1,0,1/2,0],[0,1/2,1/2,1],[0,0,0,0]]@BT@

which is used in SALSA to compute the authority and hub vectors

Team Members: Steven, Yaoyan

Q7)

OPIC uses a priority queue to keep pages arranged in order of importance. Importance is the money received for the page.

When a fetcher downloads pages and extracts the link, it evenly distributes the money amongst all links for that page. If the queue server gets from a fetcher a link already in its queue, it adds money that the fetcher gave to the url to the value the queue server has for the url.

Q10)

In PageRank algorithm, total three explicit steps need distributed file system (DFS) to store the PageRank field and

they are

1-3) after mapReduce computation of A * current_r, dangling correction * current_r, and teleporter* current_r,

all pairs (nid, node) are stored in DFS with computed PageRank values from each step;

For each mapReduce job, outputs from map functions will be stored into DFS to avoid possible loss.

(Edited: 2018-12-10) Team Members: Steven, Yaoyan

Q7)

OPIC uses a priority queue to keep pages arranged in order of importance. Importance is the money received for the page.

When a fetcher downloads pages and extracts the link, it evenly distributes the money amongst all links for that page. If the queue server gets from a fetcher a link already in its queue, it adds money that the fetcher gave to the url to the value the queue server has for the url.

Q10)

In PageRank algorithm, total three explicit steps need distributed file system (DFS) to store the PageRank field and

they are

1-3) after mapReduce computation of A * current_r, dangling correction * current_r, and teleporter* current_r,

all pairs (nid, node) are stored in DFS with computed PageRank values from each step;

For each mapReduce job, outputs from map functions will be stored into DFS to avoid possible loss.

Team Members: Tim, Neha, Tyler

Q5)

For a given query, it is first received by a main server, or a receptionist. Suppose that the query has multiple terms, t1 and t2. The receptionist will send t1 to the server that is responsible for t1 and t2 to the server that is responsible for t2. Each server will create its own accumulator list for their term. The receptionist receives the accumulator list from each server, creates a final accumulator set by with updated scores, and selects the top results from that.

Q9)

In both HITS and SALSA, we make use of an adjacency matrix L. For HITS, this L matrix is used to compute the hub score and authority score, via these given equations:

x^k=L.T*L*x^(k−1) (authority)

y^k=L*L.T*y^(k−1) (hub)

For SALSA, we actually generate two separate matrices, L_r and L_c, from the L matrix. In L_r, every row is row stochastic, e.g. all the row values sum to 1, and in the L_c matrix, every column is column stochastic. We compute the hub matrix H=L_r*L_c.T and the authority matrix A=L_c.T*L_c.

L = [[1, 1, 0], [1, 0, 1], [1, 0, 0]]

L_r = [[0.5, 0.5, 0], [0.5, 0, 0.5], [1, 0, 0]]

L_c = [[0.33, 1, 0], [0.33, 0, 1], [0.33, 0, 0]]

Team Members: Tim, Neha, Tyler

Q5)

For a given query, it is first received by a main server, or a receptionist. Suppose that the query has multiple terms, t1 and t2. The receptionist will send t1 to the server that is responsible for t1 and t2 to the server that is responsible for t2. Each server will create its own accumulator list for their term. The receptionist receives the accumulator list from each server, creates a final accumulator set by with updated scores, and selects the top results from that.

Q9)

In both HITS and SALSA, we make use of an adjacency matrix L. For HITS, this L matrix is used to compute the hub score and authority score, via these given equations:

x^k=L.T*L*x^(k−1) (authority)

y^k=L*L.T*y^(k−1) (hub)

For SALSA, we actually generate two separate matrices, L_r and L_c, from the L matrix. In L_r, every row is row stochastic, e.g. all the row values sum to 1, and in the L_c matrix, every column is column stochastic. We compute the hub matrix H=L_r*L_c.T and the authority matrix A=L_c.T*L_c.

L = [[1, 1, 0], [1, 0, 1], [1, 0, 0]]

L_r = [[0.5, 0.5, 0], [0.5, 0, 0.5], [1, 0, 0]]

L_c = [[0.33, 1, 0], [0.33, 0, 1], [0.33, 0, 0]]

Team members: Jonathan and Shehba

Problem 9

Salsa takes the Matrix L and normalizes it. HITS never normalizes the sum of columns or rows to make them sum to 1.

L

| 1 | 0 | 1 |

| 1 | 1 | 0 |

| 0 | 0 | 1 |

L_r

| 1/2 | 0 | 1/2 |

| 1/2 | 1/2 | 0 |

| 0 | 0 | 1 |

L_c

(Edited: 2018-12-10) | 1/2 | 0 | 1/2 |

| 1/2 | 1 | 0 |

| 0 | 0 | 1/2 |

Team members: Jonathan and Shehba

Problem 9

Salsa takes the Matrix L and normalizes it. HITS never normalizes the sum of columns or rows to make them sum to 1.

L

{|

|-

| 1 || 0 || 1

|-

| 1 || 1 || 0

|-

| 0 || 0 || 1

|}

L_r

{|

|-

| 1/2 || 0 || 1/2

|-

| 1/2 || 1/2 || 0

|-

| 0 || 0 || 1

|}

L_c

{|

|-

| 1/2 || 0 || 1/2

|-

| 1/2 || 1 || 0

|-

| 0 || 0 || 1/2

|}

Forrest Sun, Harita Shroff

Q:1 Explain in English what the P1 and P2 components used to derive the DFR formula mean. Explain how P2 is calculated.

- P1 represents the probability that a random document d contains exactly ft,d occurrences of t.

- P2 is related to eliteness and compensates for this rapid change. A document is said to be elite for the term t when it

"about" the topic associated with the term.

- To estimate P2, Laplace's law of succession can be used.

P2 = ft,d/ft,d+1

Forrest Sun, Harita Shroff

Q:1 Explain in English what the P1 and P2 components used to derive the DFR formula mean. Explain how P2 is calculated.

- P1 represents the probability that a random document d contains exactly ft,d occurrences of t.

- P2 is related to eliteness and compensates for this rapid change. A document is said to be elite for the term t when it

"about" the topic associated with the term.

- To estimate P2, Laplace's law of succession can be used.

P2 = ft,d/ft,d+1

(c) 2026 Yioop - PHP Search Engine